Lightning-Fast Event-Based Object Tracking for Automated Logistics

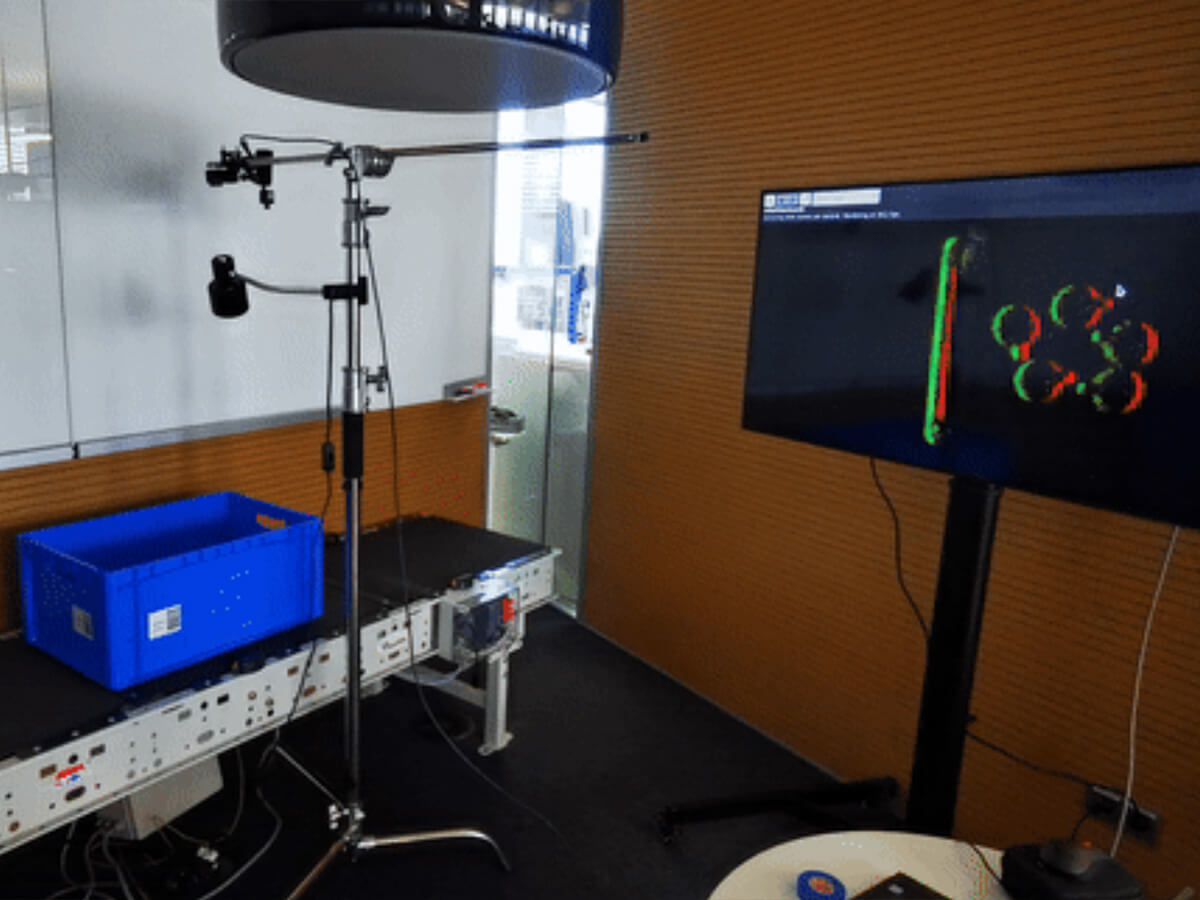

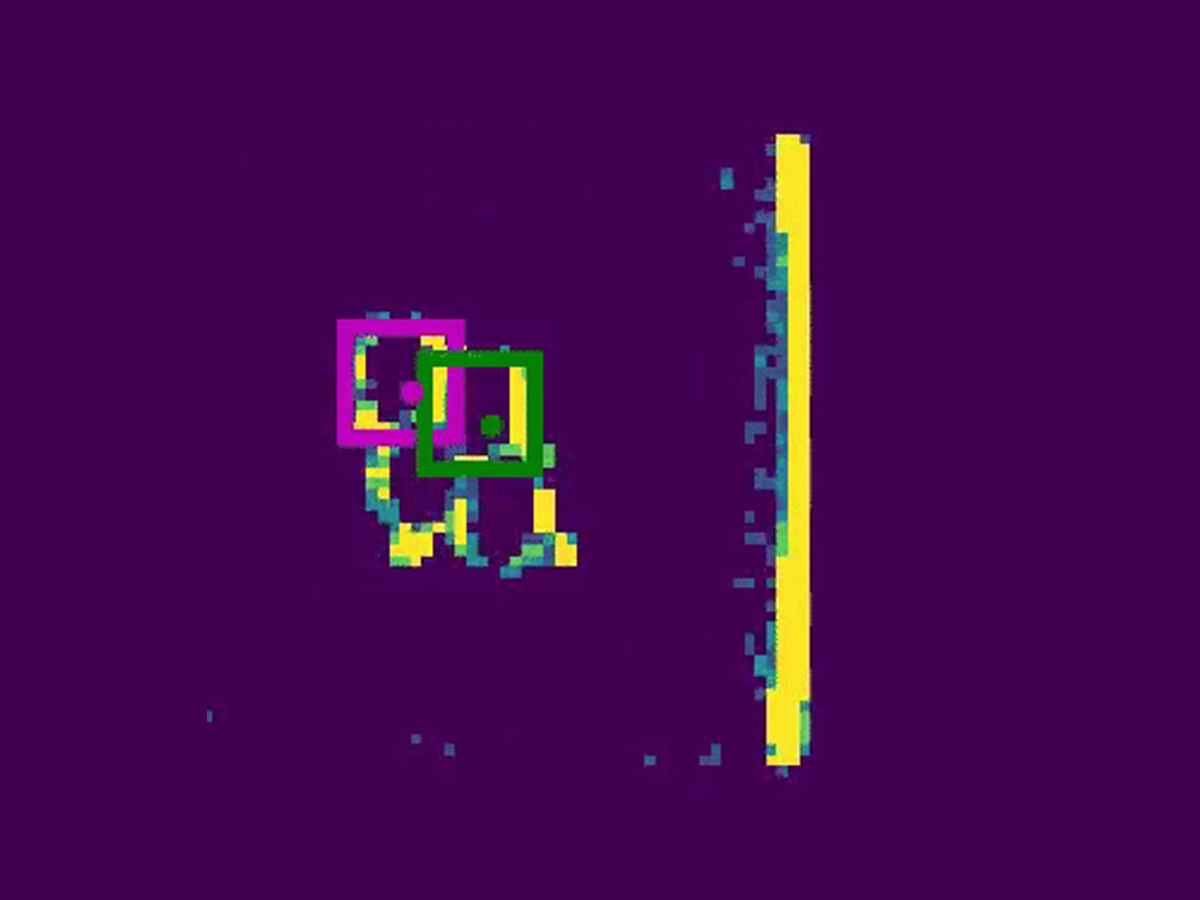

BIKINI focuses on leveraging event-based vision for robust object tracking in industrial automation. Unlike a traditional frame-based camera, our neuromorphic camera reports only motion events, drastically reducing data load and processing requirements. We designed and tested the system with a variety of objects, from mugs to glass bottles, moving along a conveyor belt, and developed custom spiking neural algorithms to track them in real time. The result: very low-latency, low-power tracking that lets robots pick moving objects quickly, accurately, and reliably without stopping the belt.
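To make the per-event idea concrete, here is a minimal sketch of how a position estimate can be refreshed every time a single event arrives, rather than once per frame. It is a deliberately simple stand-in (an exponentially weighted centroid) and not the project's spiking neural algorithms, which are not described in this text. The `Event` layout, the `EventCentroidTracker` class, and the `alpha` smoothing factor are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Event:
    """One event from an event camera (assumed layout, for illustration)."""
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for brighter, -1 for darker


class EventCentroidTracker:
    """Exponentially weighted centroid, updated once per incoming event.

    Illustrative only: a simple stand-in, not the project's SNN tracker.
    """

    def __init__(self, alpha: float = 0.05) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # hypothetical smoothing factor
        self.position: Optional[Tuple[float, float]] = None

    def update(self, ev: Event) -> Tuple[float, float]:
        """Pull the estimate a fraction `alpha` toward the new event."""
        if self.position is None:
            # The first event initialises the estimate.
            self.position = (float(ev.x), float(ev.y))
        else:
            px, py = self.position
            self.position = (
                px + self.alpha * (ev.x - px),
                py + self.alpha * (ev.y - py),
            )
        return self.position


# Synthetic stream: 200 events at x=10, then 200 events at x=30 (object moved).
tracker = EventCentroidTracker(alpha=0.05)
for i in range(200):
    tracker.update(Event(t_us=i, x=10, y=20, polarity=1))
at_start = tracker.position
for i in range(200, 400):
    tracker.update(Event(t_us=i, x=30, y=20, polarity=1))
at_end = tracker.position
```

Because the estimate is touched once per event, a static scene that produces no events costs no computation, and a fast-moving object simply produces updates more often. A real tracker would also need noise rejection and a way to handle several objects, which this sketch leaves out.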

The project explores how spiking neural networks (SNNs) and event cameras can enable real-time object and tote tracking with lower latency than the sensors currently used in industrial automation. We tested techniques such as Gabor filters, proto-object saliency, and Dynamic Neural Fields. The system achieved processing rates of up to roughly 4,800 Hz and maintained stable performance at conveyor speeds of up to 0.6 m/s. It reliably detected object position and heading, but not the full 6DoF pose. The architecture proved robust to motion blur and lighting changes, delivering low-latency responses and high-frequency outputs. Orientation detection was only partially successful, and we plan to improve it with learning-based methods. Known limitations include detection lag in edge cases and reduced accuracy in cluttered scenes.
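A quick back-of-the-envelope calculation shows what these figures mean on the belt: how far an object travels between two consecutive tracker outputs. The belt speed and output rate below are taken from the text. The 30 Hz frame rate is an assumption added purely for comparison and is not a figure from the project. The helper name `displacement_per_update_mm` is hypothetical.

```python
def displacement_per_update_mm(belt_speed_m_s: float, update_rate_hz: float) -> float:
    """Distance the belt travels between two consecutive outputs, in millimetres."""
    if update_rate_hz <= 0:
        raise ValueError("update_rate_hz must be positive")
    return belt_speed_m_s / update_rate_hz * 1000.0


BELT_SPEED_M_S = 0.6     # maximum conveyor speed reported in the text
EVENT_RATE_HZ = 4800.0   # peak processing rate reported in the text
FRAME_RATE_HZ = 30.0     # ASSUMPTION: a typical frame camera, for comparison only

event_step_mm = displacement_per_update_mm(BELT_SPEED_M_S, EVENT_RATE_HZ)
frame_step_mm = displacement_per_update_mm(BELT_SPEED_M_S, FRAME_RATE_HZ)

print(f"event-based: {event_step_mm:.3f} mm per update")
print(f"frame-based: {frame_step_mm:.1f} mm per update")
```

At the reported peak rate the object moves about 0.125 mm between outputs, compared with about 20 mm for the assumed 30 Hz camera. Note that this only bounds the spacing between updates. End-to-end latency also depends on sensing, processing, and actuation delays that are not quantified here.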

IBM

01.06.2023 - 31.12.2023