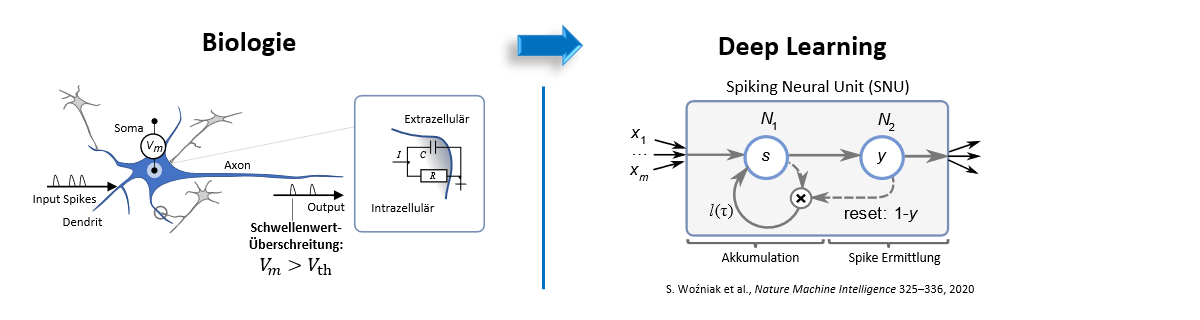

Processing visual scenes in real time, identifying scattered signal patterns and reacting accordingly: biological organisms can do all of these things, which makes them highly efficient information-processing systems. Their capabilities rest primarily on the many millions of tiny communication units in their bodies, the nerve cells, also called neurons. These highly specialized, highly sensitive cells forward information along the communication paths of our nervous system. A neuron receives information in the form of electrical and chemical signals, processes it and then passes it on. All of this happens with a dynamism and efficiency that would make even today's most modern computer systems tip their hats in respect.

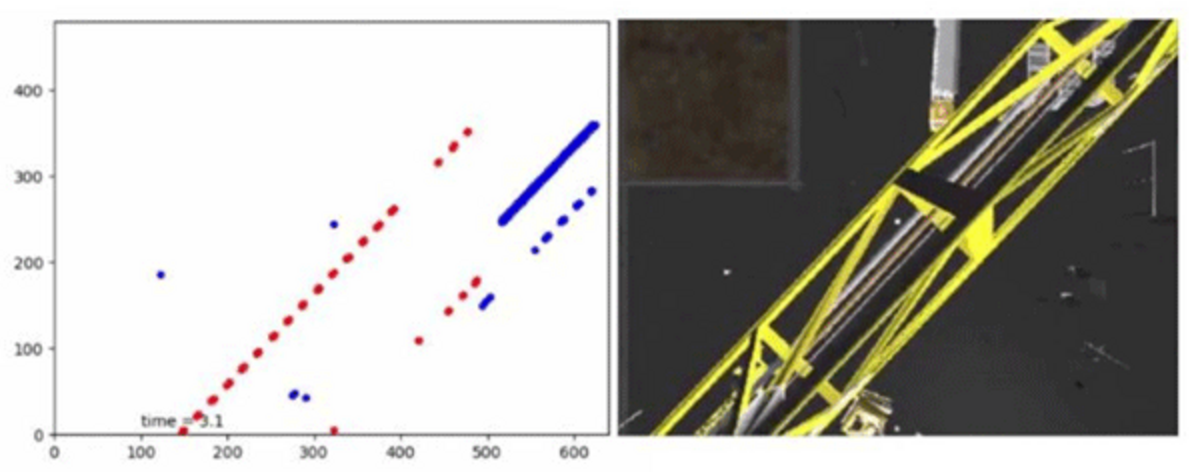

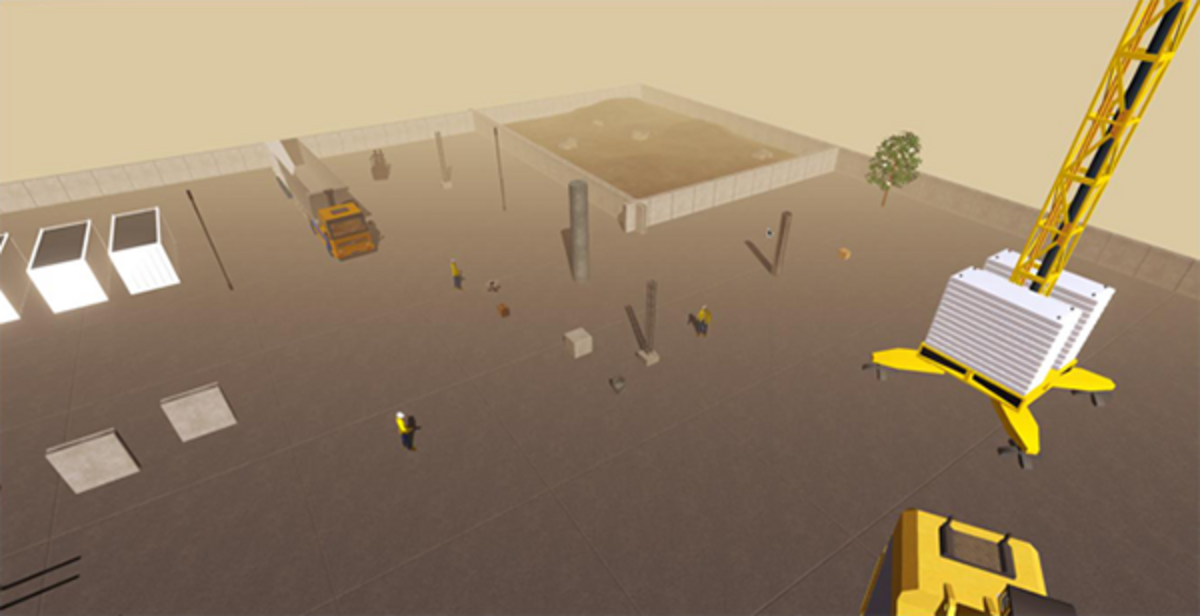

What if this dynamism and efficiency could be decoded and transferred to computer applications? And what new fields of application could this open up? Scientists at fortiss and IBM Research have been asking these questions. At their joint research center, the Center for AI, this has led to a project that could eventually open up new applications in object protection, asset management and vehicle traffic. The project name FAMOUS stands for "Field service and Asset Monitoring with On-board SNU and event-based vision in Simulated drones". The goal is to use deep learning to demonstrate the usefulness of IBM's spiking neural units (SNUs) in real image-processing applications based on event cameras.